Making machines that learn

We’re solving AI’s memory problem. Letta builds agents that remember everything, learn continuously, and improve themselves over time.


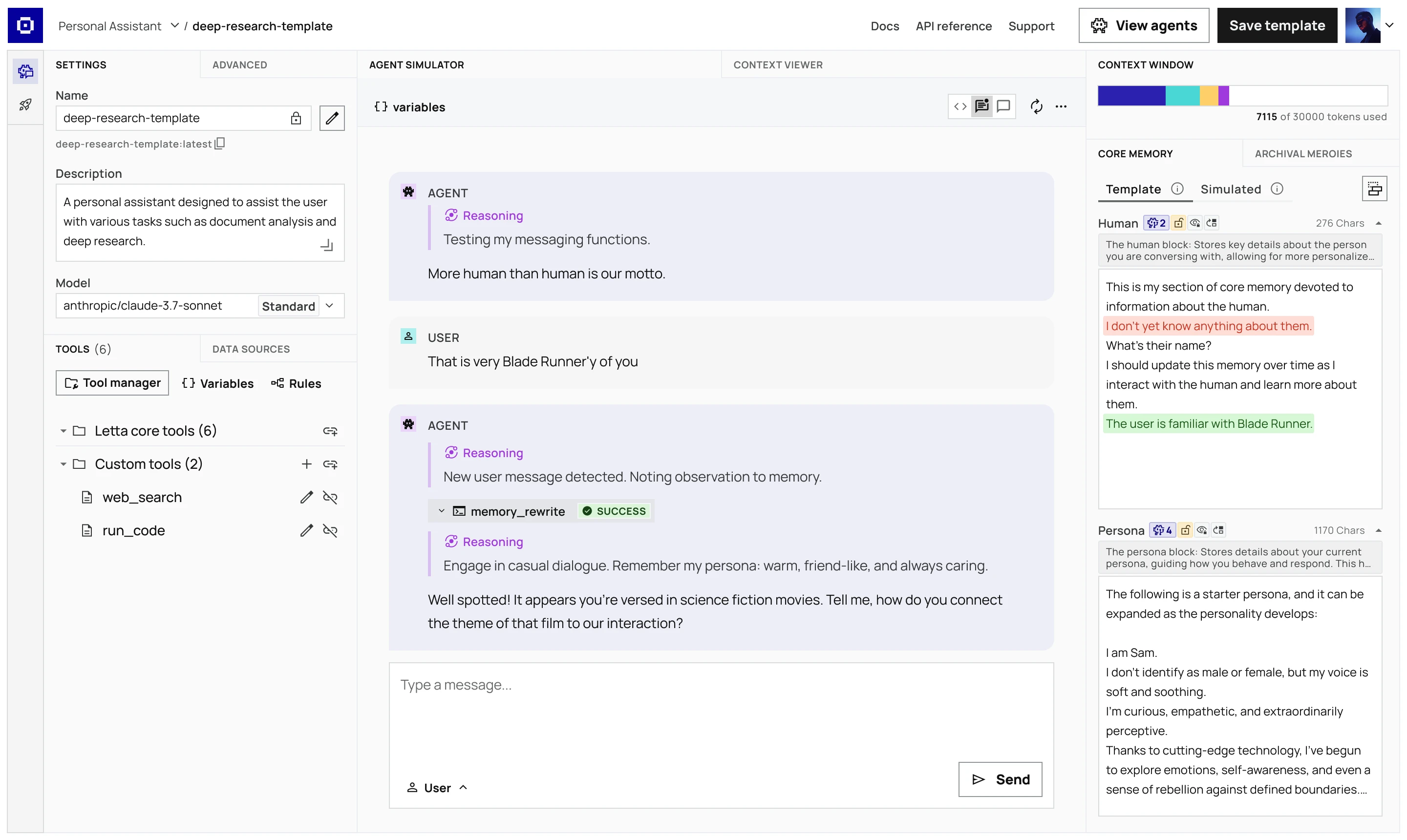

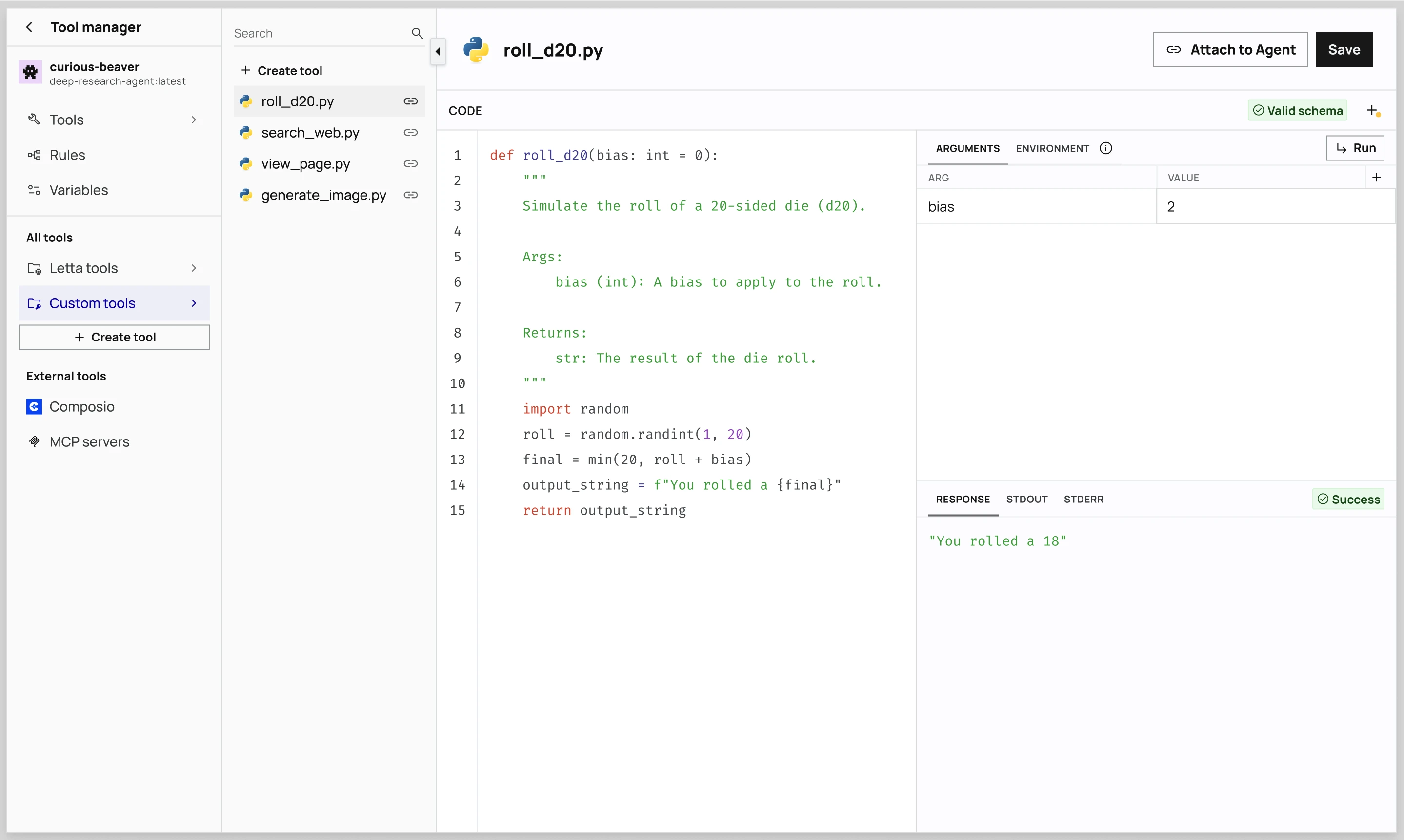

Developer Platform

Use the Letta API, the ADE (Agent Development Environment), and the SDKs to add agentic context engineering and persistent state on top of any LLM provider.

Research

We are an open AI lab pursuing foundational research in AI memory, continual learning, and self-improvement.

Backed by research, trusted by developers

Today's AI agents struggle to remember previous mistakes and cannot learn from new experiences. The Letta Developer Platform lets you build long-running, stateful agents that learn continually and improve themselves.

Agents as APIs

Letta agents are exposed as REST API endpoints, ready to integrate into your applications, with auth and identities included.
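Because each agent is an HTTP endpoint, any HTTP client can talk to it. The sketch below only builds the request (it does not send it) using Python's standard library. It assumes a base URL of `https://api.letta.com`, a `POST /v1/agents/{agent_id}/messages` route, bearer-token auth, and a `{"messages": [{"role": "user", "content": ...}]}` body; check all of these against the Letta API reference. `build_message_request` and the agent ID are hypothetical.

```python
import json
import urllib.request

# Assumed base URL; verify against the Letta API reference.
BASE_URL = "https://api.letta.com"


def build_message_request(agent_id: str, api_key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a request that posts one user message to an agent.

    Hypothetical helper: the route and payload shape are assumptions.
    """
    body = {"messages": [{"role": "user", "content": text}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/agents/{agent_id}/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_message_request("agent-123", "LETTA_API_KEY", "Hi, do you remember me?")
# To actually send it: urllib.request.urlopen(req) (needs a valid key and network access).
```

Keeping request construction separate from sending makes the payload easy to inspect or log before any network call is made.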

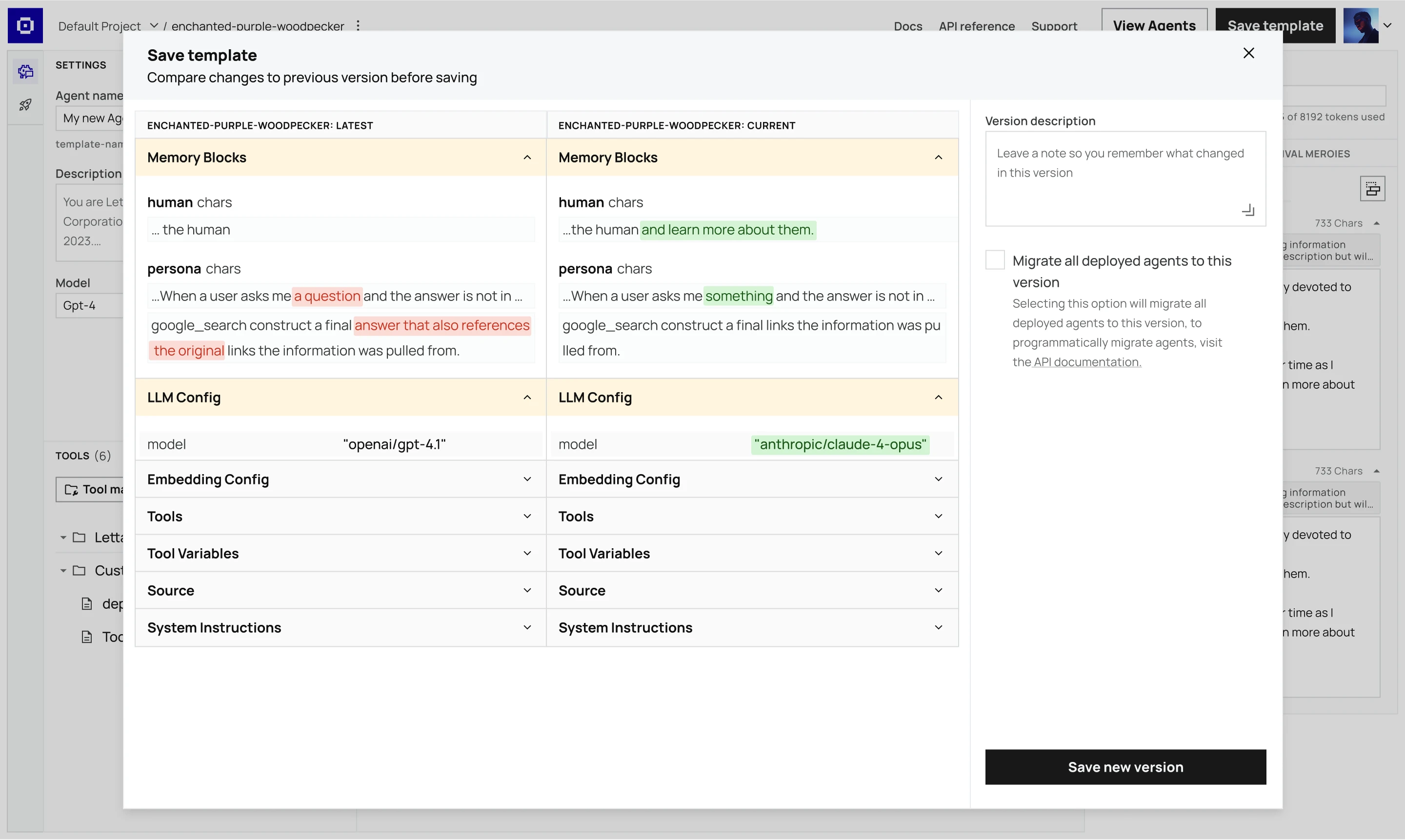

Stateful Agents

Letta persists all state automatically in a model-agnostic representation. Move agents between LLM providers without losing their memories.
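To illustrate what a model-agnostic representation buys you, here is a minimal sketch that is not Letta's actual schema: the agent's memory and history live in plain data with no provider-specific fields, so switching providers only changes the `model` handle. `MemoryBlock`, `AgentSnapshot`, `switch_model`, and the `other-provider/some-model` handle are hypothetical; the `provider/model` format mirrors the `openai/gpt-4.1` string in the SDK examples on this page.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class MemoryBlock:
    label: str  # e.g. "human" or "persona"
    value: str


@dataclass(frozen=True)
class AgentSnapshot:
    """Hypothetical provider-neutral agent state (not Letta's real schema)."""

    model: str  # "provider/model" handle, e.g. "openai/gpt-4.1"
    memory_blocks: tuple[MemoryBlock, ...] = ()
    messages: tuple[dict, ...] = ()  # plain {"role": ..., "content": ...} dicts

    def to_json(self) -> str:
        # Plain JSON with no provider-specific fields, so any backend can reload it.
        return json.dumps(asdict(self))


def switch_model(state: AgentSnapshot, new_model: str) -> AgentSnapshot:
    # Only the model handle changes; memory blocks and message history carry over.
    return replace(state, model=new_model)


before = AgentSnapshot(
    model="openai/gpt-4.1",
    memory_blocks=(MemoryBlock("human", "The human's name is Chad. They like vibe coding."),),
    messages=({"role": "user", "content": "Hi Sam!"},),
)
after = switch_model(before, "other-provider/some-model")  # placeholder handle
```

The design choice being illustrated is separation: provider-specific prompt formatting happens only when a request is made, never in what gets stored.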

Backed by Research

Letta manages context and memory with techniques designed by AI PhDs from UC Berkeley, including the creators of MemGPT.
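MemGPT-style context management keeps a small set of core memory blocks permanently in the prompt and evicts older conversation to external storage once the context window fills. The sketch below illustrates only that general idea, not Letta's actual algorithm: `Block`, `render_blocks`, and `compile_context` are hypothetical, the tagged rendering format is an assumption, and characters stand in for tokens. The `human` and `persona` labels mirror the memory blocks in the SDK examples on this page.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Block:
    label: str
    value: str


def render_blocks(blocks: list[Block]) -> str:
    # Core memory stays in every prompt; the tagged format here is an assumption.
    return "\n".join(f"<{b.label}>\n{b.value}\n</{b.label}>" for b in blocks)


def compile_context(
    blocks: list[Block], messages: list[str], budget_chars: int
) -> tuple[str, list[str]]:
    """Fit core memory plus the most recent messages into a fixed budget.

    Returns (context_text, evicted). Characters stand in for tokens here.
    """
    memory = render_blocks(blocks)
    remaining = budget_chars - len(memory)
    kept: list[str] = []
    i = len(messages)
    # Walk from newest to oldest, keeping messages while they still fit.
    while i > 0 and len(messages[i - 1]) + 1 <= remaining:
        remaining -= len(messages[i - 1]) + 1  # +1 for the joining newline
        kept.append(messages[i - 1])
        i -= 1
    evicted = messages[:i]  # oldest messages, candidates for external storage
    kept.reverse()
    return "\n".join([memory, *kept]), evicted


blocks = [
    Block("human", "The human's name is Chad. They like vibe coding."),
    Block("persona", "My name is Sam, the all-knowing sentient AI."),
]
messages = ["user: hi", "assistant: hello Chad", "user: what's my name?"]
context, evicted = compile_context(blocks, messages, budget_chars=200)
```

Evicting from the oldest end keeps the most recent turns contiguous, while the memory blocks guarantee that key facts (such as the user's name) survive eviction.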

Framework agnostic

Program your agents and connect them to your applications through Letta’s Agents API, SDKs, and framework integrations.

Python

```python
from letta_client import Letta

# Replace LETTA_API_KEY with your own Letta API key.
client = Letta(token="LETTA_API_KEY")

# Create an agent with two labeled memory blocks: one about the user, one about the agent.
agent_state = client.agents.create(
    model="openai/gpt-4.1",
    embedding="openai/text-embedding-3-small",
    memory_blocks=[
        {
            "label": "human",
            "value": "The human's name is Chad. They like vibe coding.",
        },
        {
            "label": "persona",
            "value": "My name is Sam, the all-knowing sentient AI.",
        },
    ],
)
```

TypeScript

```typescript
import { LettaClient } from '@letta-ai/letta-client';

// Replace LETTA_API_KEY with your own Letta API key.
const client = new LettaClient({ token: "LETTA_API_KEY" });

// Create an agent with two labeled memory blocks: one about the user, one about the agent.
const agentState = await client.agents.create({
  model: "openai/gpt-4.1",
  embedding: "openai/text-embedding-3-small",
  memoryBlocks: [
    {
      label: "human",
      value: "The human's name is Chad. They like vibe coding."
    },
    {
      label: "persona",
      value: "My name is Sam, the all-knowing sentient AI."
    }
  ]
});
```

Vercel AI SDK

```typescript
import { lettaCloud } from '@letta-ai/vercel-ai-sdk-provider';
import { generateText } from 'ai';

// Route a Vercel AI SDK call through an existing Letta agent.
const { text } = await generateText({
  model: lettaCloud('your-agent-id'),
  prompt: 'Write a vegetarian lasagna recipe for 4 people.',
});
```

Production-ready, proven at scale

Scale from prototypes to millions of agents on the same stack, and keep your data, state, and agent memories free from vendor lock-in.

Get started today

GitHub

Contribute to the Letta open-source project

Documentation

Learn how to build stateful agents

Discord

Join our developer Discord community

Stay up to date with Letta